
Mobile ordering rolls out at resort, Houston VC's latest investment, and more local innovation news

We're over the hill that is Houston's summer, but the Bayou City is still hot, especially when it comes to innovation news, and you may have missed a few headlines.

In this roundup of short stories within Houston startups and tech, a Houston venture capital fund has made its latest investment, Houston startups share big updates, and more.

Rivalry Technology rolls out mobile ordering at hot summer spot

You can now order poolside at this Houston-area resort. Image courtesy of Rivalry Tech

Lounging at Margaritaville Lake Resort at Lake Conroe just got easier thanks to Rivalry Tech, a Houston-based mobile ordering platform company, which has upgraded the resort's poolside ordering with its myEATz platform. According to a news release, customers can now order food and drinks from the 5 o’Clock Somewhere Bar and Lone Palm Bar by scanning custom QR codes assigned to each lounge chair and table, a system designed to increase operational efficiency for the Margaritaville Lake Resort staff.

“We wanted to be sure the rollout of the myEATz mobile ordering platform was helpful to the Margaritaville staff, not a hindrance to their existing process. We created custom QR codes and a color-coded map to easily identify where the mobile orders are going,” says Charles Willis, COO of Rivalry Tech, in the release.

Rivalry, which provides mobile ordering at numerous sports stadiums and venues through its sEATz platform, expanded into hospitality this year.

“The Rivalry Tech team helped us to seamlessly implement mobile ordering at Margaritaville Lake Resort. They created the marketing materials, established custom QR codes, uploaded menus and trained our staff onsite. The whole process has been easy and collaborative,” says Amit Sen, director of food and beverage for Margaritaville Lake Resort, in the release.

Mercury Fund invests in ReturnLogic's latest round

Mercury has led the latest fundraising round for a SaaS company. Image via Getty Images

Houston-based venture capital firm Mercury led Philadelphia-based SaaS company ReturnLogic's $8.5 million Series A funding round, which also drew participation from Revolution’s Rise of the Rest Fund, White Rose Ventures, and Ben Franklin Technology Partners. The fresh funding will help the company double its workforce, accelerate product development, and expand its application programming interface (API) capabilities, according to a news release.

ReturnLogic, founded by CEO Peter Sobotta, offers a SaaS platform that plugs into existing e-commerce platforms to help retailers manage returns and limit their costly financial impact.

“While retailers have largely mastered forward logistics to get products into customers’ hands, the returns process remains an under-addressed, resource-draining problem that eats away at brands’ profits,” says Blair Garrou, managing director of Mercury, in a news release. “ReturnLogic is something entirely new to this market and uniquely built on Peter Sobotta’s deep operational experience in reverse logistics and supply chain management.”

"While serving in the U.S. Navy, Peter specialized in reverse logistics and gained extensive expertise in ecommerce operations," Garrou continues. "With Peter at the helm, ReturnLogic’s innovative API-first returns solution is well-positioned to tackle the ever-growing operational returns problem facing retailers. We are excited to partner with Peter and his team as they continue to solve this massive problem for online retailers.”

Fluence Analytics named a top advanced manufacturing startup

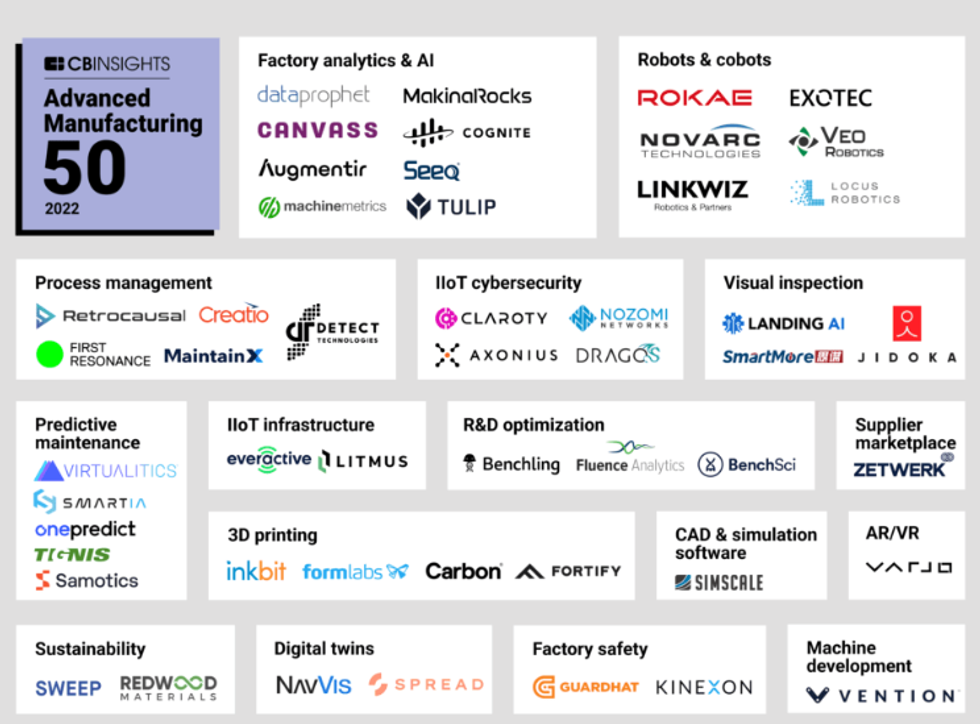

Fluence Analytics was one of 50 startups recognized. Graphic courtesy

Fluence Analytics, an analytics and process control solutions platform for the polymer and biopharmaceutical industries, was named a Top 50 global advanced manufacturing startup by CB Insights. The inaugural list, narrowed down from more than 6,000 companies that either applied or were nominated, is divided into 16 cohorts. Fluence Analytics was one of three companies featured in the R&D Optimization category.

"Our team is very excited that our real-time process analytics, optimization and control products for the polymer and biopharma industries are included among such elite startups," says Jay Manouchehri, CEO of Fluence Analytics, in a statement to InnovationMap. "We wish to thank CB Insights for including Fluence Analytics in its inaugural list of the Top 50 global advanced manufacturing startups, as well as our customers and investors for supporting the development and roll-out of our transformative technology solutions."

Fluence Analytics moved to the Houston area from New Orleans last year. The company's tech platform delivers optimization and control products to polymer and biopharmaceutical customers worldwide.

HTX Labs secures $1.7M contract to expand within United States Air Force

HTX Labs' EMPACT product will be further developed to support the Air Force. Image courtesy of HTX Labs

HTX Labs, a Houston-based company that designs extended reality training for military and business purposes, has announced that it has been awarded a $1.7 million Small Business Innovation Research (SBIR) Phase II Tactical Funding Increase (TACFI) from the U.S. Air Force to enhance and operationalize its product, the EMPACT Immersive Learning Platform, in support of training modernization.

“We are very thankful to AFWERX and AFDT for this great opportunity to play an increasingly important role in helping the USAF accelerate training modernization,” says Chris Verret, president of HTX Labs, in a news release. “This TACFI award shows continued confidence in HTX Labs, with a strong commitment to accelerate usage and adoption of EMPACT.”

HTX Labs will leverage this contract to expand EMPACT's ability to rapidly create and distribute interactive, immersive training, collaborating closely with Advanced Force Development Technologies, per the release.

OpenStax to publish free edition of updated science textbook

OpenStax is growing its access to free online textbooks. Image via openstax.org

OpenStax, a tech initiative from Rice University that publishes free learning resources online, has announced it will publish the 10th edition of an organic chemistry textbook by Cornell University professor emeritus John McMurry.

“This is a watershed moment for OpenStax and the open educational resources (OER) movement,” says Richard Baraniuk, founder and director of OpenStax, in a news release. “This publication will quickly provide a free, openly licensed, high-quality resource to hundreds of thousands of students in the U.S. taking organic chemistry, removing what can be a considerable cost and access barrier.”

Textbooks are usually a big expense for organic chemistry students. McMurry, with the support of publisher Cengage, decided to offer the latest edition online for free as a tribute to his son, Peter McMurry, who died in 2019 after a long struggle with cystic fibrosis.

“If Peter were still alive, I have no doubt that he would want me to work on this 10th edition with a publisher that made the book free to students,” McMurry says in the release. “To make this possible, I am not receiving any payment for this book, and generous supporters have covered not only the production costs but have also made a donation of $500,000 to the Cystic Fibrosis Foundation to help find a cure for this terrible disease.”