Researchers have to write extremely specific papers that require higher-order thinking. Will an intuitive AI program like OpenAI’s ChatGPT be able to imitate the vocabulary, grammar and, most importantly, content that a scientist or researcher would want to publish? And should it be able to?

The University of Houston’s Executive Director of the Research Integrity and Oversight (RIO) Office, Kirstin Holzschuh, puts it this way: “Scientists are out-of-the-box thinkers – which is why they are so important to advancements in so many areas. ChatGPT, even with improved filters or as it continues to evolve, will never be able to replace the critical and creative thinking we need in these disciplines.”

“A toy, not a tool”

The Atlantic published “ChatGPT Is Dumber Than You Think,” with a subtitle advising readers to “Treat it like a toy, not a tool.” The author, Ian Bogost, indulged in the already tired trope of asking ChatGPT to write about “ChatGPT in the style of Ian Bogost.” The unimaginative but overall passable introduction to his article was proof that “any responses it generates are likely to be shallow and lacking in depth and insight.”

Bogost expressed qualms similar to those of Ezra Klein, the podcaster behind “A Skeptical Take on the AI Revolution.” Klein and his guest, NYU psychology and neural science professor Gary Marcus, mostly questioned the reliability and truthfulness of the chatbot. Marcus calls the synthesizing of its databases and the “original” text it produces nothing more than “cut and paste” and “pastiche.” The algorithm used by the program has also been likened to auto-completion.

However, practical use cases are emerging that blur the line between technological novelty and professional utility. Whether writing working programming code or spitting out a rough draft of an essay, ChatGPT does have a formidable array of competencies, even if just how competent it is remains to be seen. All this means that as researchers look for efficiencies in their work, ChatGPT and other AI tools will become increasingly appealing as they mature.

Pseudo-science and reproducibility

The Big Idea reached out to experts across the country to determine what might be the most pressing problems and what might be potential successes for research now that ChatGPT is readily accessible.

Holzschuh stated that there are potential uses, but also potential misuses, of ChatGPT in research: “AI’s usefulness in compiling research proposals or manuscripts is currently limited by the strength of its ability to differentiate true science from pseudo-science. From where does the bot pull its conclusions – peer-reviewed journals or internet ‘science’ with no basis in reproducibility?” It’s “likely a combination of both,” she says. Without clear attribution, ChatGPT is problematic as an information source.

Camille Nebeker is the Director of Research Ethics at the University of California, San Diego, and a professor who specializes in human research ethics applied to emerging technologies. Nebeker agrees that because there is no way of citing the original sources that the chatbot is trained on, researchers need to be cautious about accepting the results it produces. That said, ChatGPT could help researchers avoid self-plagiarism, which would be a benefit. “With any use of technologies in research, whether they be chatbots or social media platforms or wearable sensors, researchers need to be aware of both the benefits and risks.”

Nebeker’s research team at UC San Diego is examining the ethical, legal and social implications of digital health research, including studies that use machine learning and artificial intelligence to advance human health and wellbeing.

Co-authorship

The conventional wisdom in academia is “when in doubt, cite your source.” ChatGPT even provides some language authors can use when acknowledging their use of the tool in their work: “The author generated this text in part with GPT-3, OpenAI’s large-scale language-generation model. Upon generating draft language, the author reviewed, edited, and revised the language to their own liking and takes ultimate responsibility for the content of this publication.” Still, a short catchall statement in your paper will likely not pass muster.

Even when authors are as transparent as possible about how AI was used in the course of research or in the development of a manuscript, the question of authorship is still fraught. Holden Thorp, editor-in-chief of Science, writes in Nature that “we would not allow AI to be listed as an author on a paper we published, and use of AI-generated text without proper citation could be considered plagiarism.” Thorp went on to say that a co-author of an experiment must both consent to being a co-author and take responsibility for a study. “It’s really that second part on which the idea of giving an AI tool co-authorship really hits a roadblock,” Thorp said.

Informed consent

On NBC News, Camille Nebeker stated that she was concerned that no informed consent was given by the participants of a study that evaluated the use of ChatGPT to support responses given to people using Koko, a mental health wellness program. ChatGPT wrote responses either in whole or in part to the participants seeking advice. “Informed consent is incredibly important for traditional research,” she said. If the company is not receiving federal money for the research, there isn’t a requirement to obtain informed consent. “[Consent] is a cornerstone of ethical practices, but when you don’t have the requirement to do that, people could be involved in research without their consent, and that may compromise public trust in research.”

Nebeker went on to say that the study information conveyed to a prospective research participant through the informed consent process may be improved with ChatGPT. For instance, difficulty understanding complex study information can be a barrier to informed consent and make voluntary participation in research more challenging. Research projects involve high-level vocabulary and comprehension, but informed consent is not valid if the participant can’t understand the risks, etc. “There is readability software, but it only rates the grade-level of the narrative, it does not rewrite any text for you,” Nebeker said. She believes that one could input an informed consent communication into ChatGPT and ask for it to be rewritten at a sixth- to eighth-grade level (which is the range that Institutional Review Boards prefer).

Can it be used equitably?

Faculty from the Stanford Accelerator for Learning, like Victor Lee, are already strategizing ways for intuitive AI to be used. Says Lee, “We need the use of this technology to be ethical, equitable, and accountable.”

Stanford’s approach will involve scheduling listening sessions and other opportunities to gather expertise directly from educators on how to strike an effective balance between the use of these innovative technologies and the university’s academic mission.

The Big Idea

Perhaps to sum it up best, Holzschuh concluded her take on the matter with this thought: “I believe we must proceed with significant caution in any but the most basic endeavors related to research proposals and manuscripts at this point until bot filters significantly mature.”

------

This article originally appeared on the University of Houston's The Big Idea. Sarah Hill, the author of this piece, is the communications manager for the UH Division of Research.

Konect.ai's tech is enhancing communications in the automotive retail industry. Konect.ai

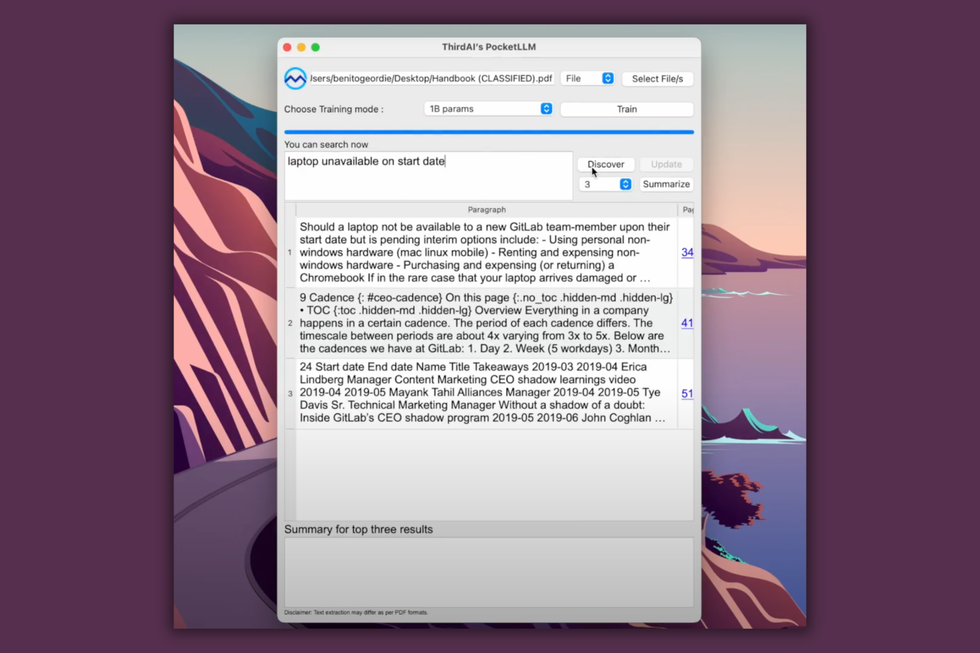

Konect.ai's tech is enhancing communications in the automotive retail industry. Konect.ai ThirdAI's PocketLLM app is free to use. Image courtesy of ThirdAI

ThirdAI's PocketLLM app is free to use. Image courtesy of ThirdAI Anshumali Shrivastava is an associate professor of computer science at Rice University. Photo via rice.edu

Anshumali Shrivastava is an associate professor of computer science at Rice University. Photo via rice.edu Apple doubles down on Houston with new production facility, training center Photo courtesy Apple.

Apple doubles down on Houston with new production facility, training center Photo courtesy Apple.