Houston researchers develop material to boost AI speed and cut energy use

A team of researchers at the University of Houston has developed an innovative thin-film material that they believe will make AI devices faster and more energy efficient.

AI data centers consume massive amounts of electricity and rely on large cooling systems to operate, adding to overall energy demand.

“AI has made our energy needs explode,” Alamgir Karim, Dow Chair and Welch Foundation Professor at the William A. Brookshire Department of Chemical and Biomolecular Engineering at UH, explained in a news release. “Many AI data centers employ vast cooling systems that consume large amounts of electricity to keep the thousands of servers with integrated circuit chips running optimally at low temperatures to maintain high data processing speed, have shorter response time and extend chip lifetime.”

In a report recently published in ACS Nano, Karim and a team of researchers introduced a specialized two-dimensional thin-film dielectric, or electrical insulator. The film, which does not store electricity, could be used to replace traditional, heat-generating components in integrated circuit chips, the essential hardware that powers AI.

The thin-film material aims to reduce the significant energy cost and heat produced by the high-performance computing necessary for AI.

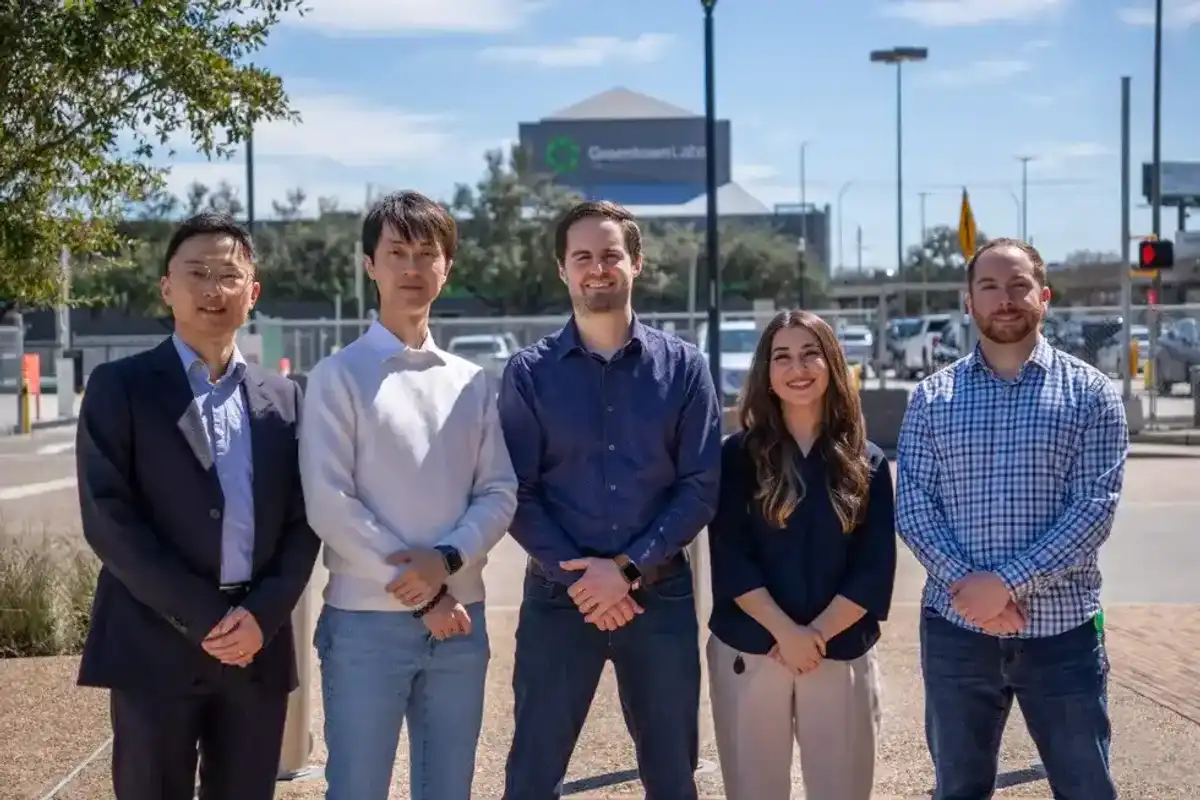

Karim and his former doctoral student, Maninderjeet Singh, used Nobel Prize-winning organic framework materials to develop the film. Singh, now a postdoctoral researcher at Columbia University, developed the materials during his doctoral training at UH, along with Devin Shaffer, a UH professor of civil engineering, and doctoral student Erin Schroeder.

Their study shows that dielectrics with high permittivity (high-k) store more electrical energy and dissipate more of it as heat than low-k dielectrics do. Karim focused on low-k materials made from light elements, like carbon, that would allow chips to run cooler and faster.

The team then created new materials with carbon and other light elements, forming covalently bonded, sheetlike films with highly porous crystalline structures using a process known as synthetic interfacial polymerization. They then studied the films' electronic properties and their applications in devices.

According to the report, the film was suitable for high-voltage, high-power devices while maintaining thermal stability at elevated operating temperatures.

“These next-generation materials are expected to boost the performance of AI and conventional electronics devices significantly,” Singh added in the release.
